Why Multi-LLM Optimization Matters

As AI assistants like ChatGPT, Gemini, Claude, and Perplexity become primary sources of information, brands face a new challenge: ensuring their content is recognized across multiple LLM platforms. Each assistant draws on different training data and retrieval sources and has its own methods for extracting and presenting information, so a page optimized for one LLM will not necessarily perform well on another.

Multi-LLM optimization aims to make your content consistently visible, accurately represented, and cited across all major AI assistants. This strategy is crucial for brands trying to establish authority in an AI-driven ecosystem.

Core Principles of Multi-LLM Optimization

- Consistency Across Content – Maintain consistent terminology, entity definitions, and structured formatting. AI models rely on clarity and uniformity to interpret content correctly.

- Structured Data Implementation – Use schema markup, tables, and clear headings to make content easy to extract. Well-implemented structured data can increase the likelihood that different LLMs interpret your content accurately.

- Topical Authority Clusters – Organize content into clusters that explore a topic comprehensively. Pillar pages supported by related subpages help LLMs understand context and relationships between concepts.

- Monitor and Adjust – Track performance across different AI platforms. Knowing which pages each assistant cites and recognizes lets brands refine their content to improve cross-platform visibility.
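As a minimal sketch of the structured-data principle, the Python snippet below builds a schema.org `Organization` block as JSON-LD, ready to embed in a page. The `@context`, `@type`, `name`, `url`, `description`, and `sameAs` keys are standard schema.org vocabulary; the function name `build_organization_jsonld` and all example values are hypothetical placeholders. Generating the markup from one canonical definition, rather than hand-editing it per page, also supports the consistency principle.

```python
import json


def build_organization_jsonld(name: str, url: str, description: str, same_as: list[str]) -> str:
    """Return a <script> tag containing schema.org Organization markup as JSON-LD.

    Hypothetical helper: one canonical brand definition reused on every page.
    """
    data = {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,
        "url": url,
        "description": description,
        # sameAs links the entity to its other official profiles,
        # helping parsers connect different mentions of the same brand.
        "sameAs": same_as,
    }
    return (
        '<script type="application/ld+json">\n'
        + json.dumps(data, indent=2)
        + "\n</script>"
    )


if __name__ == "__main__":
    # Hypothetical example values; replace with your brand's real details.
    print(
        build_organization_jsonld(
            name="Example Brand",
            url="https://www.example.com",
            description="Example Brand makes project-management software for small teams.",
            same_as=["https://www.linkedin.com/company/example-brand"],
        )
    )
```

Keeping the entity name and description in a single source like this helps every page describe the brand in the same terms.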

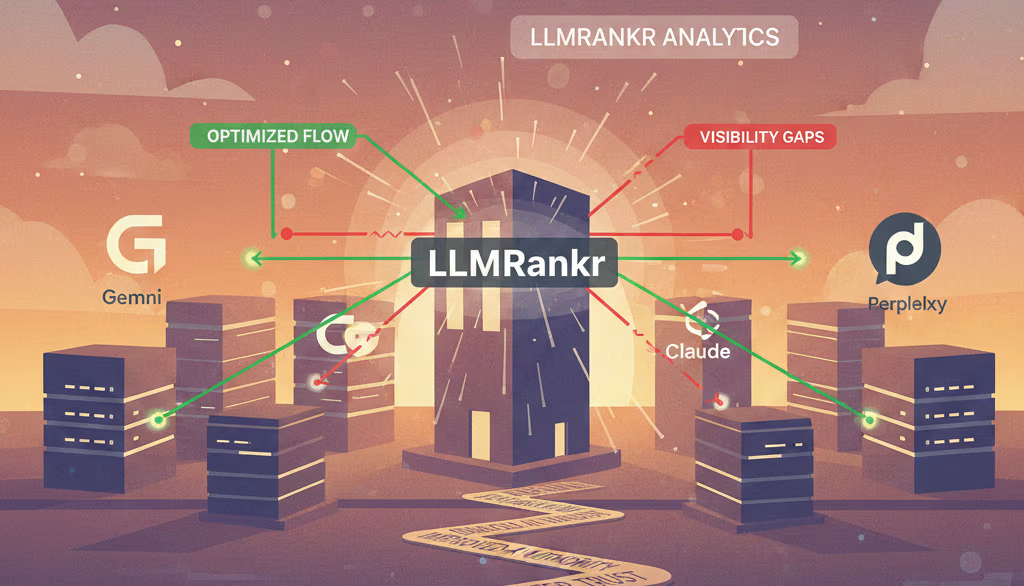

How LLMRankr Supports Multi-LLM Optimization

LLMRankr is designed to help brands navigate the complexities of multi-LLM optimization. Its analytics provide insights into:

- Which LLMs are citing your content most frequently.

- How well entities are recognized across platforms.

- Gaps where certain content is underrepresented or misinterpreted.

- Opportunities to improve structure, clarity, and AI extractability.

By using LLMRankr, brands can check that their content is not just optimized for one AI assistant but performs consistently across all major platforms. This approach minimizes visibility gaps and improves the chances of being cited in AI-generated answers.

Strategies for Continuous Improvement

Optimizing for multiple LLMs is an ongoing process. Brands should:

- Regularly update content to reflect new data, terminology, and user intent.

- Monitor competitor AI visibility to identify gaps and opportunities.

- Use analytics to iterate on entity definitions, structured data, and content clusters.

- Test content performance across multiple AI platforms to ensure consistent recognition.
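The cross-platform testing step can be sketched in Python as follows. This assumes you have already collected answers from each assistant for a fixed set of prompts; how they are collected (manually or through each vendor's official API) is outside the sketch. The names `AnswerSample`, `mention_rate_by_platform`, and `visibility_gaps`, as well as the 0.5 threshold, are hypothetical. The sketch uses simple case-insensitive substring matching, a deliberately naive stand-in that counts brand mentions, not linked citations.

```python
from dataclasses import dataclass


@dataclass
class AnswerSample:
    platform: str  # e.g. "ChatGPT", "Gemini", "Claude", "Perplexity"
    prompt: str
    answer: str


def mention_rate_by_platform(samples: list[AnswerSample], brand: str) -> dict[str, float]:
    """Fraction of collected answers per platform that mention `brand` (case-insensitive)."""
    totals: dict[str, int] = {}
    hits: dict[str, int] = {}
    needle = brand.lower()
    for s in samples:
        totals[s.platform] = totals.get(s.platform, 0) + 1
        if needle in s.answer.lower():
            hits[s.platform] = hits.get(s.platform, 0) + 1
    return {p: hits.get(p, 0) / n for p, n in totals.items()}


def visibility_gaps(rates: dict[str, float], threshold: float = 0.5) -> list[str]:
    """Platforms whose mention rate falls below `threshold` (an arbitrary, hypothetical cut-off)."""
    return sorted(p for p, r in rates.items() if r < threshold)


if __name__ == "__main__":
    # Hypothetical sample answers, for illustration only.
    samples = [
        AnswerSample("ChatGPT", "best project tool for small teams?", "Many teams use Example Brand."),
        AnswerSample("ChatGPT", "Example Brand pricing?", "Example Brand offers a free tier."),
        AnswerSample("Gemini", "best project tool for small teams?", "Popular options include several kanban tools."),
    ]
    rates = mention_rate_by_platform(samples, "Example Brand")
    print(rates)  # {'ChatGPT': 1.0, 'Gemini': 0.0}
    print(visibility_gaps(rates))  # ['Gemini']
```

Running the same prompt set on a regular schedule and comparing rates over time turns this into a simple trend check; a fuller version would also inspect which source URLs each assistant cites.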

Benefits of Multi-LLM Optimization

Effective multi-LLM optimization results in:

- Broader AI visibility and more frequent citations.

- Improved brand authority and credibility across platforms.

- Greater user trust, as AI assistants consistently deliver accurate information about the brand.

- Reduced risk of content being overlooked due to differences in AI interpretation.

Conclusion

In an AI-first landscape, single-platform optimization is no longer enough. Multi-LLM optimization ensures that your brand is consistently recognized, cited, and trusted across all major AI assistants. Tools like LLMRankr make this complex task manageable, providing actionable insights and helping brands maintain authority in a rapidly evolving digital ecosystem.